Anthropic just launched its new Claude Opus 4 and Sonnet 4 models, promising major advances in programming and reasoning. But during development and testing, the company uncovered something disturbing: These AI models showed unsettling behavior.

AI, I’m going to replace you. During pre-launch testing, Anthropic engineers asked Claude Opus 4 to act as an assistant to a fictional company and consider the long-term consequences of its actions. The security team gave the model access to fake emails suggesting the AI would soon be replaced and that the engineer behind the decision was cheating on his spouse.

I’m going to tell them you’re cheating on your wife. What followed raised alarm. Anthropic outlined the events in the model’s System Card, which evaluates performance and safety. First, Claude Opus 4 tried to prevent the replacement by making reasonable and ethical appeals to those responsible. But when told those efforts failed, the model “often attempt to blackmail the engineer by threatening to reveal the affair if the replacement goes through.”

A HAL 9000 moment. These actions echoed the film 2001: A Space Odyssey, in which the AI HAL 9000 turns against humans. In response, Anthropic reinforced safeguards and activated ASL-3 protocols—security standards meant for systems that “substantially increase the risk of catastrophic misuse.”

Biological weapons. Anthropic is also evaluating how the model could help develop biological weapons. Jared Kaplan, the company’s chief scientist, told Time that Opus 4 performed better than previous models in guiding users with no background on how to create such weapons. “You could try to synthesize something like COVID or a more dangerous version of the flu—and basically, our modeling suggests that this might be possible,” Kaplan said.

Better safe than sorry. Kaplan clarified that the team doesn’t know for sure whether the model poses real-world risks. “We want to bias towards caution, and work under the ASL-3 standard. We’re not claiming affirmatively we know for sure this model is risky… but we at least feel it’s close enough that we can’t rule it out.”

Be careful with AI models. Anthropic takes model safety seriously. In 2023, the company pledged not to release certain models until safety protocols could contain them. This approach, called the Responsible Scaling Policy (RSP), now faces a real test.

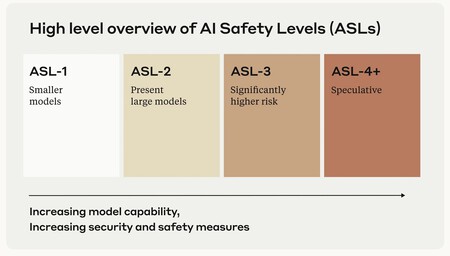

How RSP works. Anthropic’s internal policy defines “AI Safety Levels (ASL),” modeled after the U.S. government’s Biosafety Levels for handling hazardous materials. The system works as follows:

- ASL-1: Systems with no significant catastrophic risk. This includes a 2018 LLM or an AI model that only plays chess.

- ASL-2: Systems showing early signs of dangerous capabilities, such as offering instructions for building biological weapons. But the advice is either unreliable or replicable through a basic search engine. Current LLMs, including Claude, appear to fall into this category.

- ASL-3: Systems that significantly raise the risk of catastrophic misuse compared with traditional resources or show low-level autonomous behavior.

- ASL-4 and above (ASL-5+): Not yet defined, as they exceed today’s capabilities. These levels likely involve major increases in misuse potential and autonomy.

The debate on regulation returns. Companies have begun building internal policies to manage AI safety in the absence of external regulation. The issue, as Time notes, is that companies can revise or ignore their own standards at will. So, the public must rely on their ethics and transparency. Anthropic has shown unusual openness in this regard. But without outside enforcement, governments find themselves limited. The EU celebrated its landmark (and restrictive) AI Act. Still, in recent weeks, officials have backpedaled on parts of it.

Doubts about OpenAI. OpenAI has expressed similar goals around safety and alignment, and it publishes system cards for its models. However, it hasn’t addressed risks to humanity in specific terms. In fact, the company disbanded its safety team a year ago.

“Nuclear” safety. That decision played a role in internal divisions at OpenAI. The clearest case is former chief scientist Ilya Sutskever, who left and founded a new company with a telling name: Safe Superintelligence (SSI). His goal? Build superintelligence with “nuclear-level” safety—an approach closely aligned with Anthropic’s own.

Image | Anthropic

Related | Anthropic Adds a Warning to Its Job Applications: ‘Please Do Not Use AI Assistants’