Google’s journey with smart glasses is far from over. In fact, it’s just beginning. After years of silence, the company is back with new models and a familiar name: Project Aura. Google used the name once before, during the era of Google Glass, a product that ultimately became a footnote in the history of technology. This time, however, the approach is completely different.

At Google I/O 2025, the company showed the progress it has made on its new mixed reality glasses. Developed in collaboration with Xreal, the project aims to bring the Android experience seamlessly into XR, with natural, real-time responses and meaningful context.

A live demo. At the event, Shahram Izadi, head of Google’s Android XR division, went on stage and asked the audience: “Who’s up for seeing an early demo of Android XR glasses?” The answer came from backstage: Nishtha Bhatia, a product manager for Glasses and AI at Google, joined remotely to show the glasses in action.

The glasses overlaid an interface on Bhatia’s surroundings in real time. Through the built-in camera, the audience could see what was in front of her as she received messages, played music, checked directions, and interacted with Gemini. She did it all by voice, without pulling out her phone or pressing any buttons.

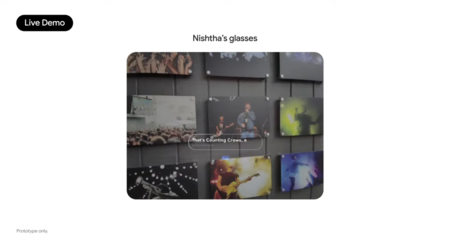

In one of the most impressive moments of the demo, she asked about the band in a photo. Gemini answered, though with a slight delay that was attributed to connection issues. She then asked for a song by the band to be played on YouTube Music, and it started playing without any manual input. The entire exchange was captured and shared in real time.

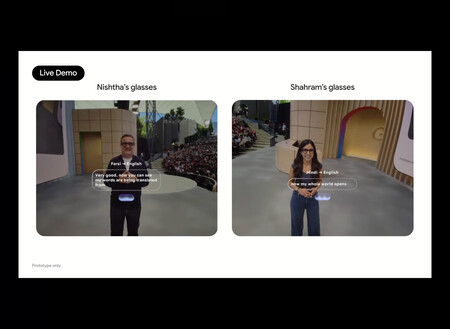

Live translation and a minor glitch. The final test was a conversation between Izadi and Bhatia, with Izadi speaking Farsi and Bhatia speaking Hindi. The Gemini-powered glasses translated the conversation in real time, with spoken interpretation. The system worked correctly for a few seconds, but the team cut the demo short when it began to glitch.

Despite the setback, the message was clear: Google wants to reenter the smart glasses market. This time, it plans to do so on a more solid foundation, backed by its ecosystem of services, Gemini, and partnerships with key players in the XR space. The current approach focuses on practical, real-time experiences, without unnecessary complications or long-term promises.

Images | Google
