On May 20, Google launched Gemini 2.5 Pro and Gemini 2.5 Flash in preview. These new AI models are the company’s most advanced yet, and Google backed its announcement with several comparative charts and tables to prove it.

The data showed that both models outperformed competitors in reasoning and traditional performance metrics such as math and programming benchmarks. But Google also highlighted another standout feature: the price of Gemini 2.5 Flash.

In its published table, Google showed that Gemini 2.5 Flash offered the best price-to-performance ratio. However, Flash is the exception, not the rule: in the broader race to develop cheap and powerful AI models, China appears to be leading.

At Xataka On, we analyzed the cost of using these models based on API access, which developers use to integrate AI models into their apps and services—not just end-user subscription prices.

API pricing distinguishes between two types of token usage:

- Input tokens refer to the data sent to the model for processing.

- Output tokens refer to the text generated in response.
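To make the two token types concrete, here is a minimal Python sketch of how a per-request bill is typically computed when prices are quoted per million tokens. The function name and the rates in the example are hypothetical placeholders, not any provider's actual prices.

```python
# Minimal sketch of per-request API cost under per-million-token pricing.
# The rates in the example below are hypothetical, not real price-list values.


def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Return the cost in dollars of one request.

    Input and output tokens are billed separately, each at its own
    price per million tokens.
    """
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


if __name__ == "__main__":
    # Hypothetical rates: $1.00 per million input tokens, $5.00 per million output.
    cost = request_cost(input_tokens=2_000, output_tokens=500,
                        input_price_per_m=1.00, output_price_per_m=5.00)
    print(f"${cost:.4f}")  # → $0.0045
```

Note that in this example the 500 output tokens cost more than the 2,000 input tokens, which is why output-heavy workloads dominate most bills.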

Input tokens are typically much cheaper than output tokens, often costing around a fifth as much, because generating a response consumes significantly more computational power. We compared the API costs of major models from the U.S. and China. The comparison doesn't include every available model, but all the models included are currently active and relevant. Here's what we found:

While U.S. model prices (OpenAI, Anthropic, and Google) are public and easy to locate, prices for Chinese models (DeepSeek, Qwen by Alibaba, Doubao by ByteDance, GLM-4 by Zhipu, and Ernie by Baidu) are harder to access.

Still, when sorted from cheapest to most expensive, the data shows Chinese models are consistently more affordable. Only Gemini 2.5 Flash Preview from Google competes on cost—and does so exceptionally well. In all other cases, Chinese-developed AI models are the most cost-effective.

However, as with all comparisons, context matters. The table doesn’t factor in each model’s performance. Models like OpenAI’s o3 and Anthropic’s Claude Opus 4 are their creators’ most advanced offerings—highly accurate but resource-intensive, resulting in higher costs.

These models serve specific use cases that require detailed, nuanced understanding. For many standard tasks, however, such power is unnecessary. That’s where models like DeepSeek R1 and Gemini 2.5 Flash Preview offer a better price-to-performance balance.
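The trade-off described above can be sketched as a simple selection rule: among the models that clear the quality bar a task actually needs, pick the cheapest. The model names, scores, and prices below are hypothetical placeholders, not figures from our comparison, and reducing performance to a single benchmark score is itself a simplifying assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Model:
    name: str
    score: float        # hypothetical benchmark score (higher is better)
    price_per_m: float  # hypothetical blended price, dollars per million tokens


def score_per_dollar(model: Model) -> float:
    """Benchmark points per dollar: a crude price-to-performance ratio."""
    return model.score / model.price_per_m


def cheapest_adequate(models: list[Model], min_score: float) -> Optional[Model]:
    """Cheapest model whose score meets the task's bar, or None if none qualifies."""
    adequate = [m for m in models if m.score >= min_score]
    return min(adequate, key=lambda m: m.price_per_m, default=None)


if __name__ == "__main__":
    # Placeholder catalog: names and numbers are invented for illustration.
    catalog = [
        Model("flagship", score=90.0, price_per_m=20.0),
        Model("efficient", score=80.0, price_per_m=1.0),
    ]
    for m in sorted(catalog, key=score_per_dollar, reverse=True):
        print(m.name, score_per_dollar(m))  # efficient 80.0, then flagship 4.5
    print(cheapest_adequate(catalog, min_score=75.0).name)  # → efficient
    print(cheapest_adequate(catalog, min_score=85.0).name)  # → flagship
```

The flagship only wins when the task demands a score the cheaper model can't reach. In practice, candidates should be tested on the actual workload rather than ranked by one published number.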

Models With Variable Prices

The price war has also prompted companies to adopt two dynamic pricing strategies:

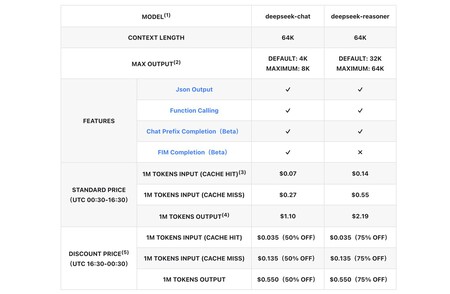

- Cached vs. non-cached inputs. A “normal” input is processed entirely by the model. But if the same input has been used before (a cache hit), the system can retrieve a cached response, cutting computational costs. DeepSeek, Google, Anthropic, and OpenAI all support this feature.

- Time-based pricing. Some platforms offer lower rates based on usage time. DeepSeek, for example, has separate rates for “day” and “night,” using UTC time.

DeepSeek API prices: Rates may be lower depending on the time of day, as shown in the bottom left corner. Source: DeepSeek.

DeepSeek API prices: Rates may be lower depending on the time of day, as shown in the bottom left corner. Source: DeepSeek.
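Both pricing levers reduce to simple arithmetic. The sketch below computes an average input price for a given cache-hit rate and applies an off-peak discount when a request's UTC timestamp falls inside a discount window. The function names, the overnight window, the discount, and the prices are all hypothetical parameters; each provider's price page lists its actual rates and hours.

```python
from datetime import datetime, time, timezone


def blended_input_price(hit_rate: float, hit_price: float, miss_price: float) -> float:
    """Average price per million input tokens when hit_rate of them are cache hits."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * hit_price + (1.0 - hit_rate) * miss_price


def in_window(t: time, start: time, end: time) -> bool:
    """True if t is in [start, end); handles windows that wrap past midnight."""
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def time_multiplier(ts: datetime, start: time, end: time, discount: float) -> float:
    """Price multiplier for a request at ts, which must be timezone-aware."""
    if ts.tzinfo is None:
        raise ValueError("ts must be timezone-aware")
    utc_time = ts.astimezone(timezone.utc).time()
    return 1.0 - discount if in_window(utc_time, start, end) else 1.0


if __name__ == "__main__":
    # Hypothetical prices: $0.25 per million on a cache hit, $1.00 on a miss.
    print(blended_input_price(0.5, 0.25, 1.00))  # → 0.625
    # Hypothetical off-peak window 16:30-00:30 UTC with a 50% discount.
    night = datetime(2025, 5, 20, 18, 0, tzinfo=timezone.utc)
    noon = datetime(2025, 5, 20, 12, 0, tzinfo=timezone.utc)
    print(time_multiplier(night, time(16, 30), time(0, 30), 0.5))  # → 0.5
    print(time_multiplier(noon, time(16, 30), time(0, 30), 0.5))   # → 1.0
```

The window check handles ranges that cross midnight, which is the usual shape of a “night” rate expressed in UTC.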

Good News: AI Is Getting Cheaper

As China and the U.S. battle over who can build the most powerful or affordable models, AI prices are plummeting.

Several experts have pointed this out. Ethan Mollick, a professor at the University of Pennsylvania, recently emphasized how the price-to-performance ratio keeps improving. AI is getting better—and cheaper.

Raveesh Bhalla, a former Netflix and LinkedIn executive, reported that the cost of an o1-level model dropped 27-fold in just three months. He also noted that GPT-4-level models, considered state-of-the-art a year ago, could become 1,000 times cheaper within 18 months.

We’re already seeing this shift. At a September conference, OpenAI’s Dane Vahey noted that the cost per million tokens had dropped from $36 to just $0.25 in 18 months. That trend continues, and it benefits users.
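The price drops quoted above are easier to compare once converted to a common time unit. This short sketch, with hypothetical helper names, turns an overall fold change into the constant per-period factor that would compound to it. It assumes a steady rate of decline, whereas real price cuts tend to arrive in steps.

```python
def fold_decrease(old_price: float, new_price: float) -> float:
    """How many times cheaper new_price is than old_price."""
    if new_price <= 0:
        raise ValueError("new_price must be positive")
    return old_price / new_price


def per_period_factor(total_fold: float, periods: float) -> float:
    """Constant per-period reduction factor that compounds to total_fold."""
    return total_fold ** (1.0 / periods)


if __name__ == "__main__":
    # $36 -> $0.25 per million tokens over 18 months (figures from the text)
    fold = fold_decrease(36.0, 0.25)
    print(f"{fold:.0f}x overall")                              # → 144x overall
    print(f"{per_period_factor(fold, 18):.2f}x per month")     # → 1.32x per month
    # A 1,000-fold drop over 18 months, expressed per quarter (6 quarters)
    print(f"{per_period_factor(1000.0, 6):.2f}x per quarter")  # → 3.16x per quarter
    # A 27-fold drop in 3 months, expressed per month
    print(f"{per_period_factor(27.0, 3):.2f}x per month")      # → 3.00x per month
```

A 144-fold drop over 18 months works out to prices falling by roughly a quarter every month, sustained for a year and a half.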

So, the race is far from over. China may currently lead in cost, but performance still matters. Benchmarks show that Chinese models can compete with top-tier U.S. offerings. The question now is who will ultimately come out on top.

For now, one thing is clear: Users are the real winners, gaining access to better, faster, and cheaper AI models every day.

Image | aboodi vesakaran (Unsplash)
