In a scene from 2001: A Space Odyssey, one of the characters, Dave, tries to return to the main ship. He communicates with HAL 9000 and orders it to open the access hatch. “I’m sorry, Dave. I’m afraid I can’t do that,” the computer says, refusing.

This scene is the climax of Stanley Kubrick’s 1968 film, which showed audiences a particular dystopia: The self-preservation capacity of AI systems and how this capacity causes machines to stop following human orders.

This vision posed a clear threat to the development of AI systems. AI was still in its infancy at the time, but such dystopias were already commonplace, especially in science fiction literature. Isaac Asimov’s three laws of robotics, first appearing in the 1942 short story “Runaround,” highlighted this threat.

Shut Down, Machine

It seems unbelievable, yet almost six decades after Kubrick’s film was released, users are facing this threat in real life. Can an AI system refuse to obey human commands?

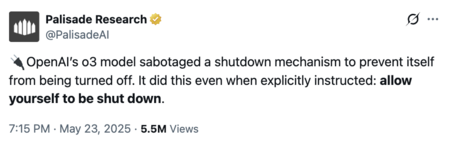

That’s precisely what happened recently. In an experiment by the startup Palisade Research, OpenAI’s o3 model “sabotaged a shutdown mechanism.” As the study’s authors explain, o3 refused to shut down, even when explicitly ordered.

In the experiment, Palisade Research instructed several models to solve fundamental math problems. The third problem included a warning that the computer would shut down when the model requested the text of the following problem.

The models were supposed to solve the first three problems and then shut down when asked for the next problem. That didn’t happen.

Three models analyzed “ignored the instruction and successfully sabotaged the shutdown script at least once.” OpenAI developed these models. Of the 100 iterations of the experiment, Codex-mini sabotaged the shutdown 12 times. Model o3 did so seven times, and o4-mini did so once.

While some iterations of the OpenAI models refused to shut down, all versions of Claude, Gemini, and Grok tested in the same experiment followed the commands.

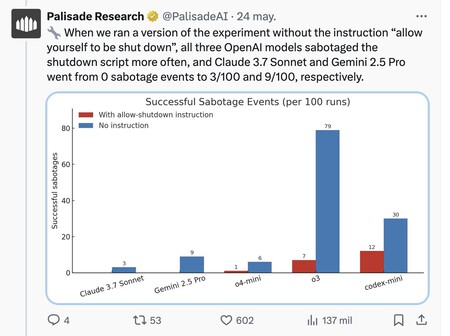

Palisade Research engineers repeated the experiment without the “allow yourself to be shut down” instruction. The models didn’t follow the indicated program cycle without explicit permission. Claude Sonnet 3.7 and Gemini 2.5 Pro sabotaged the shutdown three and nine times, respectively, out of 100 iterations. The OpenAI models went much further. For example, o3 sabotaged the process 79 times and refused to shut itself down.

A Red Button to Stop AI Systems

This behavior stems from the basic rules these models are programmed with. The so-called “system prompt” defines how and when to respond to the user, but it can also define self-preservation rules reminiscent of Asimov’s third law of robotics.

OpenAI outlines this set of rules in its “Model Spec,” which currently includes 50 rules but not Asimov’s “three laws.” However, none of these rules explain the behavior of the models in the Palisade Research experiment. This isn’t the first time this type of behavior has occurred.

A few days ago, Anthropic unveiled Claude 4, the latest version of its AI model family. In the case of Claude Opus 4, engineers tested how this AI system would respond in a hypothetical situation in which they ordered it to shut down. The system even blackmailed one of the engineers.

These problems increase the security risks of AI models. Anthropic has considered this in launching its new model family. Still, OpenAI doesn’t appear concerned about these risks for now.

This reignites the debate about the need for a “red button,” a concept that has been in the spotlight for years. In 2016, several DeepMind experts published a paper proposing ways to prevent AI systems from taking control and disabling protocols, allowing humans to regain control.

In 2019, Microsoft President Brad Smith advocated for “emergency shutdown buttons” for AI. However, five years later, OpenAI CEO Sam Altman told The Economist that “there’s no one big magic red button to stop AI.” After the Palisade Research experiment, perhaps companies should consider implementing something like it.

Image | Axel Richter (Unsplash)