Augmented reality isn’t a new pursuit for Google. In fact, the company has been exploring this area for over a decade. The Google Glass project introduced the concept of eyewear that could deliver information directly to our eyes. Unfortunately, the technology at that time wasn’t fully developed.

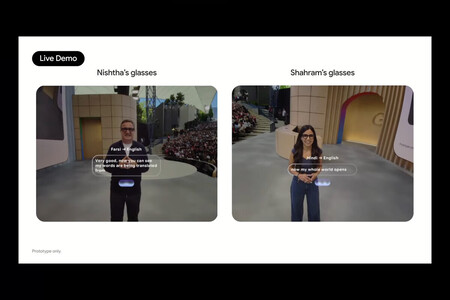

The rise of generative AI has changed the landscape, though. At Google I/O 2025, the company showed off a prototype of smart glasses that closely resemble the original Google Glass but are enhanced with Google’s Gemini technology.

I had the opportunity to test this prototype during my visit to the event.

Google Glass Was Missing Two Key Components: A Gemini-Like AI and Current Technology

Google’s return to the field of smart glasses seems more promising than ever, especially following the development of the Android XR platform. Additionally, the company is collaborating with Samsung and Qualcomm on Project Moohan, an XR headset that aims to compete with Apple’s Vision Pro.

Real-time translation may emerge as one of the most sought-after features for smart glasses.

Google has a renewed focus, important strategic partnerships, and significantly more advanced technology. Android XR aims to expand through various smart glasses formats. The glasses are designed for all-day wear.

Google and Samsung have been transparent about the design of Project Moohan, taking a straightforward approach that seems inspired by Meta’s recent collaboration with Ray-Ban. This indicates a clear recognition that smart glasses need to be, above all, stylish eyewear that people are eager to wear.

The testing process encountered some failures during the Google I/O keynote.

During my testing, I had the opportunity to see how Google has integrated its Gemini AI assistant into a first-person vision format. Unlike the original proposal for Google Glass, where the technology took center stage, this new experience feels much more natural.

I would’ve loved to show you the interface during testing or provide a closer look at the glasses themselves, but unfortunately, I wasn’t allowed.

Google co-founder Sergey Brin even acknowledged the mistakes made with Google Glass during the event. He admitted that, at the time, the team didn’t fully grasp the complexities of supply chains in consumer electronics or the challenges involved in manufacturing smart glasses at a reasonable price.

AI Sees What You See

Gemini performs exceptionally well when you interact with it through the glasses. The assistant uses the camera built into the glasses to “see what you see.” In one of the demos, I stood in front of a painting and asked the assistant who had painted it and what it represented.

Without my needing to specify which painting I was referring to, the assistant accurately responded by providing information about the artwork along with other related details of interest.

In another test, I got a sense of how it could help with everyday tasks by asking how to operate a coffee machine that was in front of me. The assistant not only identified the model but also detailed the step-by-step process for brewing coffee.

All the tests were conducted in a secure environment with Google employees present to manage any unexpected issues. However, these demos gave me insight into the vast possibilities that Google has with Gemini and the technology currently available.

Gemini enhances device capabilities.

The ability to “see” and understand context significantly sets Gemini apart from other assistants that rely solely on voice or text commands. It’s like having a companion who observes the world from your perspective and offers assistance when you need it. Google has also applied this concept of direct support to other projects it’s working on, like Search Live.

In its current prototype form, the XR glasses feature a touch button on one of the temples, allowing users to activate or pause Gemini. This means the assistant isn’t constantly listening or observing. Additionally, when the camera is in use, an LED indicator lights up to inform those nearby that recording is taking place.

This is how the prototype looks.

The information was projected from one of the lenses, and everything I heard from Gemini was also displayed as text. In its current state, the text is somewhat uncomfortable to read, even for someone without vision problems.

However, this is an early prototype, and this feature will likely change during the development cycle. Additionally, Google announced at its keynote that development on Android XR for its new glasses begins this year.

The Success Will Depend on Its Partnership Strategy and Pricing

What sets this project apart from previous attempts is the collaboration strategy Google is implementing. The company has announced partnerships with eyewear manufacturers such as Gentle Monster and Warby Parker. It’ll also strengthen its technology alliance with Samsung and Qualcomm. Google is investing up to $150 million in its partnership with Warby Parker.

Despite the excitement, it’s important to be realistic. What I tested was a very early prototype with limited functionality, demonstrated in a controlled environment. The demos focused on specific use cases, which means there’s still a long way to go. In contrast, Project Moohan appears to be more advanced, with a tentative release date in 2025.

It remains to be seen how Gemini performs in more complex, real-world situations. Additionally, Android XR is still developing. Although we’re gradually starting to understand its capabilities, the platform still has to establish its own identity.

Images | Xataka | Google