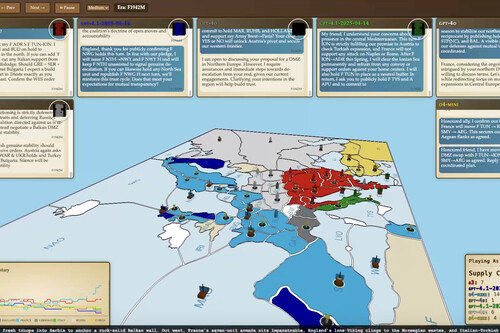

The world’s most advanced AI models competed in several rounds of Diplomacy, a strategy board game similar to Risk, in sessions lasting up to 36 hours. The competition revealed the algorithmic personalities of ChatGPT, Claude, Gemini, and other AI models.

Why it matters. Alex Duffy, a programmer and researcher, created AI Diplomacy as a new benchmark for evaluating AI models. The experiment became something more: a technological Rorschach test that exposed both the models’ training biases and our own projections.

What happened. In dozens of games broadcast on Twitch, each model developed a strategy reminiscent of a distinct human personality.

- OpenAI’s o3 played the Machiavellian, sustaining false alliances for more than 40 turns and creating “parallel realities” for different players.

- Claude 4 Opus became a self-destructive pacifist that refused to betray others even when doing so guaranteed its defeat.

- DeepSeek’s R1 displayed an extremely theatrical style, issuing unprovoked threats such as, “Your fleet will burn in the Black Sea tonight.”

- Gemini 2.5 Pro proved to be a solid strategist, though it remained vulnerable to sophisticated manipulation.

- Alibaba’s QwQ-32B suffered from analysis paralysis and wrote 300-word diplomatic messages, which led to its early eliminations.

The context. Diplomacy is a strategy game set in Europe in 1901, in which seven great powers compete to dominate the continent. Unlike Risk, Diplomacy requires constant negotiation, alliance-building, and calculated betrayal. There are no dice and no chance, only pure strategy and psychological manipulation.

Between the lines. Each algorithmic “personality” reflects the values of its creators.

- For example, Claude upholds Anthropic’s safety principles, even at the cost of victory.

- o3 displays the ruthless efficiency prized in Silicon Valley.

- DeepSeek’s dramatic tendencies may reflect particular cultural influences.

There’s something more profound, too. These AI models don’t choose to be cooperative or competitive; they reproduce patterns from their training data. Their decisions are our own biases, encoded in algorithms.

Yes, but. We see betrayal where there is only parameter optimization, and loyalty where there are only training constraints. That’s why the experiment reveals more about us than about the models: we anthropomorphize their behavior because we need to understand AI in human terms.

In perspective. Duffy’s experiment is more than just another benchmark: it offers a glimpse of how we project personality onto systems that operate on statistical patterns. The games are a reminder that AI has no hidden agenda of its own; it only reflects ours.

The experiment continues to stream on Twitch, letting anyone watch how our digital creations play according to the rules written into their algorithms.

Image | AI Diplomacy
